Date: Wed, 2 Mar 2016 22:19:46 +0000

Hi Vittorio, thanks for answering

About scenario and parameters:

Electrons travel through a 10 m “vacuum” pipe (actually filled with air at 1 atm) and are deflected by a magnetic field. Along this path they generate bremsstrahlung, which travels through a vacuum pipe and then hits some equipment.

In that “air” pipe, multiple scattering is suppressed (MULSOPT card); furthermore, I’m using the PRECISIO defaults and a RANDOMIZ card, ignoring Rayleigh scattering in all regions (by the way, what’s the difference between “ignore” and “nothing” on the EMFRAY card?), activating all gamma interactions with nuclei (PHOTONUC), and using a production cut-off (EMFCUT) of 1 keV for photons and 500 keV for electrons.

About the two-step simulation:

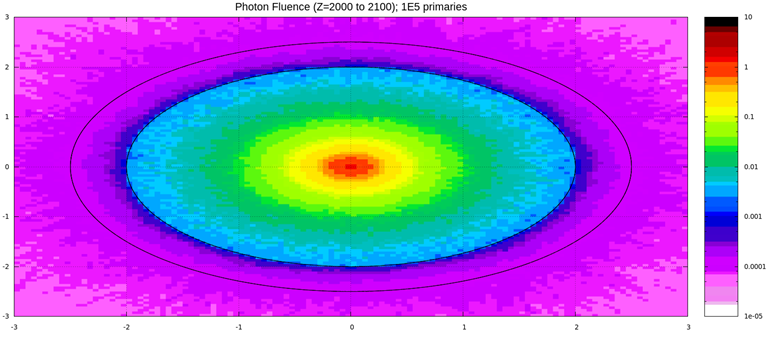

The attached figure shows the photon fluence in this pipe 1500 cm downstream of the end of the air path (and 8 m upstream of the hit point). The idea is to use such spectra as input for the next simulations.

The challenge is editing source.f: changing the parameters for beam position (XFLK, YFLK and ZFLK), weight (WTFLK (NPFLKA)) and energy (TKEFLK (NPFLKA)), perhaps inside a DO loop in order to insert the entire spectrum.
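One way to prototype the spectrum sampling before porting it to source.f is sketched below in Python. It assumes the scored spectrum has been exported as bin edges plus one differential value per bin (the numbers here are made up for illustration), builds a cumulative distribution and draws energies by inverse-transform sampling, so that every sampled photon carries unit weight. In source.f the table would be read once at the first call and the drawn value assigned to the kinetic energy of the primary.

```python
import bisect
import random

def build_cdf(edges, values):
    """Cumulative probabilities at the upper edge of each bin.

    edges  : n+1 increasing bin edges (GeV)
    values : n non-negative differential values (per GeV), one per bin
    """
    integrals = [v * (hi - lo) for v, lo, hi in zip(values, edges[:-1], edges[1:])]
    total = sum(integrals)
    cdf, running = [], 0.0
    for part in integrals:
        running += part
        cdf.append(running / total)
    cdf[-1] = 1.0  # guard against round-off in the last bin
    return cdf

def sample_energy(edges, cdf, rng=random):
    """Pick a bin in proportion to its integral, then a uniform energy inside it."""
    u = rng.random()
    i = min(bisect.bisect_right(cdf, u), len(cdf) - 1)
    return edges[i] + rng.random() * (edges[i + 1] - edges[i])

# Hypothetical three-bin spectrum, for illustration only.
EDGES = [0.001, 0.01, 0.1, 1.0]   # GeV
VALUES = [50.0, 4.0, 0.1]         # photons per GeV per primary (made up)
CDF = build_cdf(EDGES, VALUES)
```

Sampling uniformly inside a bin is the simplest choice; with coarse bins on a steep spectrum, a finer binning or log-uniform sampling inside each bin may represent the shape better.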

For a first test, I kept energy and weight constant and tried to solve the position issue using the code below, assuming a Gaussian distribution in this Ø40 mm pipe (see random.png). However, after compiling* and opening the executable in Flair/Run, the simulation does not run (“Timed-out” or “finish with errors”).

RandA = FLRNDM(XDUMMY)

RandB = FLRNDM(XDUMMY)

PosX=0.598413*EXP(-RandA*RandA/0.88889)

SigY=SQRT(0.2*0.2-PosX*PosX)

PosY=(1/SQRT(2*3.14159*SigY*SigY))* EXP(-RandB*RandB/(2*SigY*SigY))

XFLK (NPFLKA) = PosX

YFLK (NPFLKA) = PosY

ZFLK (NPFLKA) = 1500

Instead of:

XFLK (NPFLKA) = XBEAM

YFLK (NPFLKA) = YBEAM

ZFLK (NPFLKA) = ZBEAM
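An offline check of the position formulas above, sketched in Python, points to one possible cause of the failure. The constant 0.598413 equals 1/sqrt(2π·0.44444), so PosX is the value of a Gaussian density (σ ≈ 0.667) evaluated at a uniform random number, not a Gaussian-distributed coordinate. PosX therefore lies between about 0.19 and 0.60, and the argument of SQRT in the SigY line is negative unless RandA exceeds about 0.987. The last function shows the usual alternative: draw normally distributed coordinates and reject points outside the pipe. The σ of 2/3 cm and the 2 cm radius are assumptions inferred from the constants and the Ø40 mm pipe. Inside source.f the normal deviates would have to come from FLUKA's own Gaussian generator (believed to be FLNRR2; please check the manual), not from Python.

```python
import math
import random

def sqrt_argument(rand_a):
    """Argument of SQRT in the SigY line of the original attempt."""
    pos_x = 0.598413 * math.exp(-rand_a * rand_a / 0.88889)
    return 0.2 * 0.2 - pos_x * pos_x

def smallest_valid_rand_a():
    """Smallest RandA for which the SQRT argument is non-negative."""
    return math.sqrt(-0.88889 * math.log(0.2 / 0.598413))

def sample_position(sigma=2.0 / 3.0, radius=2.0, rng=random):
    """Gaussian x and y (cm), rejecting points outside the pipe radius.

    sigma and radius are assumed values, not taken from the post.
    """
    while True:
        x = rng.gauss(0.0, sigma)
        y = rng.gauss(0.0, sigma)
        if x * x + y * y <= radius * radius:
            return x, y
```

For example, `sqrt_argument(0.5)` is negative, so in Fortran the corresponding SigY (and hence PosY) would be NaN for most primaries.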

==============================

*Compilation steps, which complete successfully:

export FLUFOR=gfortran

cd $FLUPRO

$FLUPRO/flutil/fff $FLUPRO/flutil/s160302.f

$FLUPRO/flutil/lfluka -o source20160302 -m fluka $FLUPRO/flutil/source20160302.o

From: Vittorio Boccone [mailto:dr.vittorio.boccone_at_ieee.org]

Sent: Tuesday, March 1, 2016 17:46

To: Marlon Saveri Silva <marlon.saveri_at_lnls.br>

Cc: fluka-discuss_at_fluka.org; Trinh Ngoc-Duy <Ngoc-Duy.Trinh_at_ganil.fr>; Jeferson de Souza <jeferson.souza_at_cnpem.br>; Allan Gilmour Anderson Junior <allan.gilmour_at_lnls.br>

Subject: Re: RES: [fluka-discuss]: RE:Estimating hardware configurations for bremsstrahlung simulations

Hi Marlon,

it depends on how large the simulation you are running is. If you have a long beamline in air, you could kill the low-energy transport in a region of space far from where you need results, in order to save computing power. You haven’t been very specific about your simulation parameters. Could you briefly describe your simulation scenario?

A two-step simulation could also be a solution in some cases. Personally, my approach would be to dump the status of the simulated particles at a certain interface (together with their weights) and then sample the spectra as a function of position on that surface (mind that you might also have to deal with the particles’ direction cosines). You would have large files, yes, but still in the GB range, which is rather small for this kind of study.

A two-step simulation typically requires a good understanding of your simulation and of the analysis code you use to do the magic between steps 1 and 2, and might need more scripting/programming time than the actual run :D.
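The "magic between steps 1 and 2" can be prototyped offline along these lines. This is only a sketch in Python: the file layout (one particle per line with position, direction cosines, energy and weight) is hypothetical and depends on how the dump was written in step 1. Records are drawn with probability proportional to their weight, so each drawn particle is then started with unit weight and keeps its correlated position, direction and energy.

```python
import io
import random

def read_dump(stream):
    """Parse records 'x y z cx cy cz energy weight' (hypothetical layout)."""
    records = []
    for line in stream:
        fields = line.split()
        if len(fields) != 8 or line.lstrip().startswith("#"):
            continue  # skip comments and malformed lines
        records.append(tuple(float(f) for f in fields))
    return records

def sample_record(records, rng=random):
    """Draw one record with probability proportional to its weight.

    The returned particle should then be started with unit weight.
    """
    weights = [r[7] for r in records]
    return rng.choices(records, weights=weights, k=1)[0]

# Two made-up records standing in for a real dump file.
EXAMPLE = io.StringIO(
    "# x y z cx cy cz E[GeV] weight\n"
    "0.10 -0.20 1500.0 0.001 0.002 0.999997 0.050 1.0\n"
    "0.00  0.30 1500.0 0.000 0.000 1.000000 0.400 3.0\n"
)
RECORDS = read_dump(EXAMPLE)
```

The alternative is to replay every record exactly once with its original weight; that avoids resampling noise but fixes the number of step-2 primaries to the number of dumped particles.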

Cheers,

V.

On Mar 1, 2016, at 9:20 PM, Marlon Saveri Silva <marlon.saveri_at_lnls.br> wrote:

Still about Rick’s answer:

Is there any card better than USRBDX for this job? USRBDX gives us the spectrum and, with some approximations, the directions of the particles crossing the boundary between two regions; however, it does not show the position where each particle crosses the boundary, which we also need when using the particles as input. So a card giving the position, direction, particle type and energy at the boundary crossing would be perfect. Otherwise, I’ll stick with USRBDX, using some concentric vacuum pipes to estimate the position of those particles.

From: Vittorio Boccone [mailto:dr.vittorio.boccone_at_ieee.org]

Sent: Monday, February 29, 2016 17:18

To: Marlon Saveri Silva <marlon.saveri_at_lnls.br>

Cc: Marlon Saveri Silva <marlon.saveri_at_lnls.br>; fluka-discuss_at_fluka.org; Trinh Ngoc-Duy <Ngoc-Duy.Trinh_at_ganil.fr>

Subject: Re: [fluka-discuss]: RE:Estimating hardware configurations for bremsstrahlung simulations

Hi Marlon,

as already mentioned, you can split your job into different sub-jobs by SPAWNING it with Flair and run one process per core (or virtual core) you have on your machine (or virtual machine). You can also run the job on other machines simultaneously, but remember to use different random seed numbers, otherwise you will lose statistical significance.
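When the job is split by hand across several machines, the different seeds have to be set explicitly in each copy of the input file. The Python sketch below only generates the RANDOMIZ lines; it assumes the usual fixed card format (10-column keyword followed by 10-column numeric fields, with the logical unit in the first field and the seed in the second), and the starting seed value is arbitrary.

```python
def randomiz_card(seed, unit=1.0):
    """RANDOMIZ card in fixed format: 10-column keyword, then 10-column fields."""
    return f"{'RANDOMIZ':<10}{unit:>10.1f}{float(seed):>10.1f}"

def seeds_for_jobs(n_jobs, first_seed=1001):
    """One distinct seed per job; the starting value is arbitrary."""
    return [first_seed + i for i in range(n_jobs)]

# One card per independent job, e.g. six jobs on six cores or machines.
CARDS = [randomiz_card(s) for s in seeds_for_jobs(6)]
```

Each card would replace the RANDOMIZ line in one copy of the input; results from the copies can then be merged as independent cycles.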

Many labs have Linux clusters for machine and physics calculations, so you can probably find some more (hopefully a lot more) computing power to run your simulation. You might need to ask your sysadmins to get FLUKA up and running, but it’s worth the effort. Technically speaking, you would need about 1 GB per core, which is a common memory-per-core density (on the lower side) for a typical machine.

Best,

V

On Feb 26, 2016, at 6:46 PM, Trinh Ngoc-Duy <Ngoc-Duy.Trinh_at_ganil.fr> wrote:

Hi,

In fact, when you spawn your simulation into n jobs in Flair, Flair uses n cores of your computer, and each core runs one simulation. So it is a parallel calculation. But this technique only works with n < number of cores of your computer.

If you want to run FLUKA on more cores, you need to connect different computers into a parallel calculation network. This means that your simulation will run on different cores of different computers. In this case, you need a special configuration. I don't know how to do it myself.

You can estimate the time to run your simulation by comparing the FLOPS of your CPU with those of the other one. More FLOPS means faster calculation.

About Rick's answer: I also want to try a technique like that, but it seems quite difficult when dealing with angular distributions, energy distributions...

Best regards.

Trinh.

________________________________

From: Marlon Saveri Silva [marlon.saveri_at_lnls.br]

Sent: Friday, February 26, 2016 17:48

To: Trinh Ngoc-Duy; fluka-discuss_at_fluka.org

Subject: RES: Estimating hardware configurations for bremsstrahlung simulations

Thanks for answering. I haven’t tried using Linux directly, just because I’m using Windows for other work at the same time, but it seems to be a good idea.

Well, actually I don’t know how FLUKA is really running. When I use some spawns, I see in the System Monitor that all processors (and memory) are being used. So I supposed it was running in parallel (number of spawns in Flair = number of processors (or cores) in parallel?), but http://willworkforscience.blogspot.com.br/2012/01/quick-and-dirty-guide-for-parallelizing.html says that FLUKA runs in “serial”, and I didn’t understand what the author meant by “serial”.

About these different cores… Does it mean that I need to run FLUKA through another software or code different from Flair, programming the task division?

About the computational time: given that it takes 8 days for 1E8 primaries with our present hardware configuration, is it possible to estimate the number of processors/cores and the memory needed to run it in 1 day?
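A first-order answer follows from simple proportionality, sketched below in Python. It assumes the run time scales linearly with the number of primaries and inversely with the number of independent spawned jobs, with no overhead; the 6 spawns come from the original message in this thread, and the 1 GB per core figure is the rule of thumb quoted earlier.

```python
import math

def cores_needed(ref_days, ref_cores, ref_primaries, target_primaries, target_days):
    """Cores for the target run, assuming time scales linearly with primaries
    and inversely with the number of independent jobs (no parallel overhead)."""
    core_days = ref_days * ref_cores * target_primaries / ref_primaries
    return math.ceil(core_days / target_days)

def memory_gb(cores, gb_per_core=1.0):
    """Total RAM under the assumed rule of thumb of about 1 GB per core."""
    return cores * gb_per_core

# Reference: 8 days, 6 spawns, 1E8 primaries.
SAME_RUN_IN_ONE_DAY = cores_needed(8, 6, 10**8, 10**8, 1)       # 48 cores
FIVE_TIMES_IN_ONE_DAY = cores_needed(8, 6, 10**8, 5 * 10**8, 1)  # 240 cores
```

Since the reference run took more than 8 days and ran inside a virtual machine, these numbers are only a rough guide; a short benchmark on the target cluster would give a better core-hours-per-primary figure.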

And, finally, about Rick’s answer: it seems to be an amazing solution. We’re looking at gas bremsstrahlung and neutrons. What’s the easiest way to import such spectra (outputs of the initial run for neutrons and photons) and use them as input for other runs? Is there some automatic tool, or do you suggest using a USRBDX card, getting the spectra, saving them in a .dat file and then reading them in the source.f file? Is this second solution able to deal with all the parameters of this new source (different weights, angular directions)?

-----Original Message-----

From: owner-fluka-discuss_at_mi.infn.it [mailto:owner-fluka-discuss_at_mi.infn.it] On behalf of akardeep_at_barc.gov.in

Sent: Friday, February 26, 2016 09:10

To: Marlon Saveri Silva; fluka-discuss_at_fluka.org

Subject: Re: [fluka-discuss]: RE:Estimating hardware configurations for bremsstrahlung simulations

You can spawn the run, which assigns different cycles of a run to different cores.

From: Rick Donahue [mailto:rjdonahue_at_lbl.gov]

Sent: Thursday, February 25, 2016 18:37

To: Marlon Saveri Silva <marlon.saveri_at_lnls.br>

Subject: Re: [fluka-discuss]: Estimating hardware configurations for bremsstrahlung simulations

Marlon,

You mentioned 1 atm so I’m guessing you’re looking specifically at gas brem. If so then maybe you only need one initial run with e- to get the downstream brem spectrum. Subsequent runs can just sample this brem spectrum as the source. You can normalize results per watt of brem power. If you start w/ e- in each run then you’re using a lot of computing time calculating the same brem spectrum over and over. Good luck.
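The normalization per watt of brem power amounts to a unit conversion, sketched here in Python. It assumes the second-step results are scored per source photon and that the sampled source is described by photon energies in GeV with statistical weights; the function names are illustrative only.

```python
GEV_TO_JOULE = 1.602176634e-10  # 1 GeV expressed in joules

def mean_energy_gev(energies, weights):
    """Weighted mean photon energy of the sampled source (GeV)."""
    return sum(e * w for e, w in zip(energies, weights)) / sum(weights)

def photons_per_second_per_watt(mean_gev):
    """Source photons per second that carry 1 W of bremsstrahlung power."""
    return 1.0 / (mean_gev * GEV_TO_JOULE)

def rate_per_watt(result_per_photon, mean_gev):
    """Scale a per-source-photon result to a rate per watt of brem power."""
    return result_per_photon * photons_per_second_per_watt(mean_gev)
```

Multiplying the per-watt rate by the brem power obtained from the first (electron) run for a given stored current then gives the absolute rate.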

—Rick

From: Trinh Ngoc-Duy [mailto:Ngoc-Duy.Trinh_at_ganil.fr]

Sent: Friday, February 26, 2016 05:36

To: Marlon Saveri Silva <marlon.saveri_at_lnls.br>; fluka-discuss_at_fluka.org

Subject: RE:Estimating hardware configurations for bremsstrahlung simulations

Dear Marlon Saveri Silva,

From my experience, when I run a heavy program on a virtual machine, the CPU performance slows down (RAM does not play a big role there; your 11 GB is already large). Have you ever tried installing Linux on your machine and running FLUKA directly on it?

From your e-mail, I suppose that you run FLUKA in parallel on different cores of your machine. If you want to run FLUKA on a supercomputer, keep in mind that you will have to run FLUKA on different cores of different CPUs of the supercomputer. The task will be more complicated. I have never tried it before.

The computational time in fact depends on each simulation, so you have to estimate it for each job. It's difficult to choose one set of computational parameters for all jobs.

Best regards.

Ngoc Duy TRINH, GANIL, France

________________________________

From: owner-fluka-discuss_at_mi.infn.it [owner-fluka-discuss_at_mi.infn.it] on behalf of Marlon Saveri Silva [marlon.saveri_at_lnls.br]

Sent: Thursday, February 25, 2016 20:30

To: fluka-discuss_at_fluka.org

Subject: [fluka-discuss]: Estimating hardware configurations for bremsstrahlung simulations

Dear Fluka experts,

I’m working on some bremsstrahlung simulations for synchrotron beamlines in order to check the dose absorbed by some components and the equivalent dose in some regions. I’m already applying 1 atm inside the electron pipe and using the recommended biasing techniques (from the ADONE example) to speed up the simulation. Even so, in order to get USRBIN statistics better than 20%, I need at least 1E8 primaries. Using 11 GB RAM and 7 Intel Xeon 3.2 GHz processors in an Ubuntu virtual machine hosted on 64-bit Windows 7, with 4 cycles and 6 spawns, it takes more than 8 days and generates more than 6 GB of files.

I believe some of you have already done this kind of study and could enlighten me about the best hardware configuration for such a case; for example, what would we need in order to run that simulation, with 5E8 primaries, in 1 day?

Since there are lots of cases to simulate (and some require more than 1E8 primaries), I intend to rent time on a supercomputer at another institution. To do this, I need to fill in a form telling them the computational parameters I need (number of cores, processors, disk space, parallelism technique, RAM…) and the number of hours of simulation.

Regards,

Marlon Saveri Silva

Mechanical Engineer

Beamlines Instrumentation and Support Group – SIL

Brazilian Synchrotron Light Laboratory– LNLS – CNPEM

________________________________

Preserve our environment, print this email only if necessary.

(image/png attachment: Usrbin.png)

(image/png attachment: Random.PNG)